Imagine you’re looking at a city skyline at sunset. Each building reflects the same light differently—some gleam gold, others fade into shadow. If you only measured the average brightness, you’d miss the richness of this contrast. Traditional regression models work much the same way: they focus on the average trend between predictors and a response variable, missing how relationships vary across different points in the distribution. Quantile regression, on the other hand, lets us study the entire skyline—the tall towers and the hidden alleys alike.

Rethinking Prediction: Why Averages Aren’t Enough

In many real-world problems, averages hide valuable details. Suppose a data analyst is studying income versus education. A standard regression might show that more education increases income on average. But what about those at the very top or bottom of the income ladder? Quantile regression steps in to reveal these subtle variations.

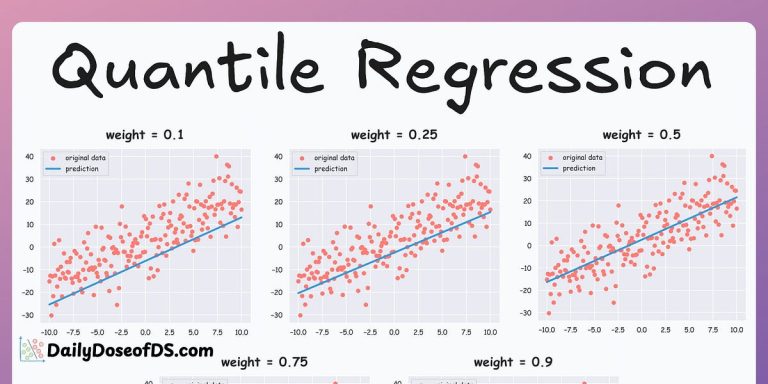

It models how predictors affect different quantiles (for example, the 10th, 50th, or 90th percentile) of the response distribution, not just the mean. It's like shifting a spotlight along the skyline to study how light behaves across all heights. This makes quantile regression a valuable tool for anyone taking a Data Scientist course in Coimbatore, since understanding complex, uneven data patterns is key to mastering predictive analytics.

The Core Intuition: Modelling the Conditional Quantiles

At its heart, quantile regression focuses on estimating conditional quantiles rather than conditional means. In simpler terms, it asks: What is the effect of education on the 10th percentile of income? On the 90th percentile?
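For reference, the quantity being modelled can be written down explicitly. Here F is the conditional distribution function of the response given the predictors. The second line is the usual linear quantile model, which is a modelling assumption rather than the only option. Setting τ = 0.5 gives the conditional median, and each τ gets its own coefficient vector β(τ).

```latex
\begin{aligned}
Q_Y(\tau \mid x) &= \inf\{\, y : F_{Y \mid X}(y \mid x) \ge \tau \,\}, \qquad 0 < \tau < 1,\\
Q_Y(\tau \mid x) &= x^{\top}\beta(\tau) \qquad \text{(linear quantile model)}.
\end{aligned}
```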

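Mathematically, it minimises an asymmetric absolute-error loss (often called the check or pinball loss) that penalises under- and over-predictions differently. For the τ-th quantile, under-predictions are weighted by τ and over-predictions by 1 − τ. The asymmetry is what steers the fit towards the chosen quantile. The absolute-value form, rather than squared error, is what makes the estimates robust to outlying responses and well suited to skewed distributions. Think of it as adjusting the lens focus: not every photo requires centring the subject; sometimes the edge details tell the real story.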
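A small sketch of that loss, assuming NumPy. The check loss is ρ_τ(r) = r(τ − 1{r < 0}), and the linear estimator minimises the sum of ρ_τ(y_i − x_iᵀβ) over β. With no predictors at all, minimising the average loss over a single constant should land on the sample τ-quantile. The code checks this on a simulated right-skewed sample using a brute-force grid search, which is chosen for clarity, not speed. The helper name pinball_loss is illustrative.

```python
import numpy as np

def pinball_loss(residual, tau):
    """Check (pinball) loss rho_tau(r) = r * (tau - 1{r < 0}).

    Positive residuals (under-predictions: y above the fit) are weighted by tau;
    negative residuals (over-predictions) are weighted by 1 - tau.
    """
    residual = np.asarray(residual, dtype=float)
    return residual * (tau - (residual < 0))

rng = np.random.default_rng(0)
y = rng.exponential(scale=2.0, size=1_000)  # simulated right-skewed sample

tau = 0.9
# Grid search for the constant c that minimises the average pinball loss.
candidates = np.linspace(y.min(), y.max(), 5_001)
avg_loss = [pinball_loss(y - c, tau).mean() for c in candidates]
best_c = candidates[int(np.argmin(avg_loss))]

print(f"argmin of average pinball loss: {best_c:.3f}")
print(f"np.quantile(y, 0.9):            {np.quantile(y, tau):.3f}")
```

The two printed numbers should agree to within roughly one grid step. The empirical loss is flat between the two order statistics that bracket the 0.9 quantile, so any point in that gap is a minimiser.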

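Ordinary least squares (OLS) summarises the data with a single conditional mean, and its standard errors assume homoscedasticity (constant error variance). Quantile regression, by contrast, accommodates heteroscedasticity. When a predictor widens or narrows the spread of the response, the slopes estimated at different quantiles differ, which uncovers a fuller picture of the data's internal structure.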

Applications: From Economics to Climate Science

Quantile regression has quietly become a workhorse across disciplines. Economists use it to analyse income inequality, health researchers apply it to model responses to treatments, and environmental scientists deploy it to study extreme weather patterns.

In finance, for instance, it helps analysts understand how risk factors behave across different parts of the return distribution. This is vital when modelling Value at Risk (VaR), which is itself a quantile of the loss distribution. Similarly, in healthcare analytics, it helps clinicians identify which factors most influence patients at the extremes: those least or most responsive to therapy.
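Because VaR at level α is a quantile of returns, its simplest (unconditional) version is a single quantile computation. The sketch below assumes NumPy and uses simulated heavy-tailed returns in place of real market data. A conditional VaR would instead fit a quantile regression of returns on the chosen risk factors at τ = α.

```python
import numpy as np

rng = np.random.default_rng(7)
# Hypothetical daily returns: Student-t with 3 degrees of freedom (heavy tails), scaled by 0.01.
returns = 0.01 * rng.standard_t(df=3, size=10_000)

alpha = 0.05
# One-day 95% VaR: minus the 5th percentile of returns, i.e. a loss level
# exceeded on about 5% of days in this sample.
var_95 = -np.quantile(returns, alpha)
print(f"95% one-day VaR: {var_95:.2%} of portfolio value")
```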

Students mastering the craft through a Data Scientist course in Coimbatore often find quantile regression a revelation—it’s where they first realise that “average effects” can be misleading, especially in asymmetric or heavy-tailed datasets.

Quantile Regression vs. Traditional Regression: A Contrast of Perspectives

To appreciate its strength, imagine two artists painting the same scene. The first uses a single brushstroke style for the entire canvas—representing OLS regression, which applies one line of best fit. The second artist layers the painting, using different tones for shadows, midtones, and highlights—similar to quantile regression, which reveals nuance.

While OLS gives one equation representing the expected (mean) response, quantile regression produces a family of lines, one for each quantile. This lets analysts see how a relationship shifts from the lower to the upper end of the response distribution. For instance, a 1% increase in advertising budget might yield minimal returns at the lower sales quantiles but much larger gains at the upper ones.

Moreover, quantile regression does not require the errors to be normally distributed or to have constant variance. It's a model for the real world: messy, irregular, and beautifully complex.
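Each line in that family comes from its own optimisation problem. As a from-scratch sketch, the pinball-loss objective for a linear model can be written as a linear programme and solved once per quantile. The sketch assumes SciPy's linprog with the HiGHS solver and synthetic data whose spread widens with x. The helper fit_quantile_lp is hypothetical, written for illustration. Dedicated libraries use more efficient algorithms for the same objective.

```python
import numpy as np
from scipy.optimize import linprog

def fit_quantile_lp(X, y, tau):
    """Hypothetical helper: linear quantile regression as a linear programme.

    Variables: beta (free), u_plus >= 0, u_minus >= 0, constrained so that
    y - X @ beta = u_plus - u_minus. Minimising
    tau * sum(u_plus) + (1 - tau) * sum(u_minus) equals the total pinball loss.
    """
    n, p = X.shape
    c = np.concatenate([np.zeros(p), tau * np.ones(n), (1 - tau) * np.ones(n)])
    A_eq = np.hstack([X, np.eye(n), -np.eye(n)])
    bounds = [(None, None)] * p + [(0, None)] * (2 * n)
    res = linprog(c, A_eq=A_eq, b_eq=y, bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    return res.x[:p]  # (intercept, slope)

rng = np.random.default_rng(1)
n = 300
x = rng.uniform(0, 10, size=n)
y = 5.0 + 1.5 * x + (1.0 + 0.3 * x) * rng.standard_normal(n)  # spread widens with x
X = np.column_stack([np.ones(n), x])

taus = [0.1, 0.25, 0.5, 0.75, 0.9]
family = {tau: fit_quantile_lp(X, y, tau) for tau in taus}
for tau, (b0, b1) in family.items():
    print(f"tau = {tau:<4}: y = {b0:.2f} + {b1:.2f} x")
```

In this synthetic example, the predicted values at x = 5 should rise with τ and the slopes should fan out, which is what growing noise produces.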

Implementation: From Theory to Practice

Implementing quantile regression is remarkably accessible. Most modern statistical and machine learning libraries provide straightforward tools, such as the rq() function in R's quantreg package or the QuantReg class in Python's statsmodels.

The process typically involves:

- Selecting quantiles of interest (e.g., 0.25, 0.5, 0.75).

- Fitting the regression model for each quantile separately.

- Comparing coefficient patterns to understand variations across the response distribution.
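The three steps above can be sketched with the statsmodels formula interface. The sketch assumes pandas and statsmodels, and it uses a synthetic DataFrame with hypothetical columns x and y in place of a real dataset.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf

rng = np.random.default_rng(3)
n = 1_000
df = pd.DataFrame({"x": rng.uniform(0, 10, size=n)})
# Synthetic response whose spread grows with x.
df["y"] = 2.0 + 1.0 * df["x"] + (0.5 + 0.4 * df["x"]) * rng.standard_normal(n)

# Step 1: select the quantiles of interest.
quantiles = [0.25, 0.5, 0.75]

# Step 2: fit a separate model for each quantile (same formula, different q).
fits = {q: smf.quantreg("y ~ x", df).fit(q=q) for q in quantiles}

# Step 3: line up the coefficients to compare them across the distribution.
coef_table = pd.DataFrame({q: res.params for q, res in fits.items()}).T
coef_table.index.name = "quantile"
print(coef_table.round(3))
```

Each fitted result also provides conf_int(), which helps judge whether the slope differences between quantiles are larger than the sampling noise.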

For instance, when analysing property prices, the impact of location or proximity to amenities might be far greater for luxury homes (upper quantiles) than for budget homes (lower quantiles). This insight enables more targeted decision-making—an essential skill for advanced data professionals.

Why Quantile Regression Matters for the Modern Data Scientist

In an age where data comes in waves—messy, multi-dimensional, and often skewed—relying solely on mean-based models can lead to oversimplified conclusions. Quantile regression embodies a more democratic view of data. It doesn’t let the extremes be silenced by the middle.

For aspiring analysts and professionals, learning this method is more than an academic exercise. It’s a mindset shift—from summarising data to understanding it in all its unevenness. Institutions offering specialised analytics education have increasingly woven it into their advanced coursework to prepare students for real-world complexity.

Quantile regression equips analysts to:

- Handle non-normal error distributions gracefully.

- Model heteroscedasticity, where the spread of the response changes with the predictors.

- Derive insights tailored to different data segments.

- Communicate findings that reflect true variability, not a simplified average.

Conclusion: Seeing Beyond the Centre Line

If traditional regression is a melody that plays in the middle octaves, quantile regression is a whole symphony. It captures the quiet lows and the dramatic highs, revealing patterns that averages mute. For data professionals, it's an invitation to listen to the entire orchestra of their dataset, not just its dominant tune.

By mastering this method, analysts learn to see data not as a flat surface but as a landscape of elevations and valleys—each revealing its own truth. Quantile regression isn’t just a statistical technique; it’s a way of thinking—one that values diversity in data behaviour and embraces complexity as a source of insight.

In that sense, it’s not only an analytical tool but also a reflection of what every modern analyst should aspire to be—curious, perceptive, and precise.